“The Compositional Architecture of the Internet”

Communications of the ACM, March 2019, Vol. 62 No. 3, Pages 78-87

Contributed Articles

By Pamela Zave, Jennifer Rexford

“For the past 25 years, the Internet has been evolving to meet new challenges through composition with new networks that its original architecture did not anticipate. These new networks make alternative trade-offs that are not compatible with the general-purpose Internet design, or maintain alternative views of network structure.

In an architecture composed of self-contained networks, each network is a microcosm with all the basic network mechanisms, including a namespace, routing, a forwarding protocol, session protocols, and directories. The mechanisms are specialized for the network’s purposes, membership, geographical span, and level of abstraction.”

In 1992, the explosive growth of the World Wide Web began. The architecture of the Internet was commonly described as having four layers above the physical media, each providing a distinct function: a “link” layer providing local packet delivery over heterogeneous physical networks, a “network” layer providing best-effort global packet delivery across autonomous networks all using the Internet Protocol (IP), a “transport” layer providing communication services such as reliable byte streams (TCP) and datagram service (UDP), and an “application” layer. In 1993, the last major change was made to this classic Internet architecture [11]; since then, the scale and economics of the Internet have precluded further changes to IP.
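To make the classic layering concrete, here is a minimal Python sketch. It assumes a toy model in which a header is represented only by its protocol name, and each layer, from the application down to the link, prepends its own header to whatever it receives from the layer above. The names `Packet`, `CLASSIC_STACK`, `encapsulate`, and `headers_between` are hypothetical and do not correspond to any real networking library.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    headers: tuple[str, ...]  # protocol names only (toy model), outermost (first on the wire) first
    payload: bytes            # application data, untouched by the layers below


# Classic four-layer view, listed from the application layer down to the link layer.
CLASSIC_STACK = ("HTTP", "TCP", "IP", "Ethernet")


def encapsulate(data: bytes, stack: tuple[str, ...]) -> Packet:
    """Pass data down the stack; each layer prepends its own header."""
    packet = Packet(headers=(), payload=data)
    for protocol in stack:  # topmost layer first
        packet = Packet(headers=(protocol,) + packet.headers, payload=packet.payload)
    return packet


def headers_between(packet: Packet, upper: str, lower: str) -> list[str]:
    """Headers strictly between two protocols; `lower` sits nearer the wire."""
    names = list(packet.headers)
    return names[names.index(lower) + 1 : names.index(upper)]


pkt = encapsulate(b"hello", CLASSIC_STACK)
print(pkt.headers)                               # ('Ethernet', 'IP', 'TCP', 'HTTP')
print(headers_between(pkt, "HTTP", "Ethernet"))  # ['IP', 'TCP']
```

Because the stack is fixed at four layers, the only headers between HTTP and Ethernet are one TCP header and one IP header, which is what the classic description predicts.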

A lot has happened in the world since 1993. The overwhelming success of the Internet has created many new uses and challenges that were not anticipated by its original architecture:

- Today, most networked devices are mobile.

- There has been an explosion of security threats.

- Most of the world’s telecommunication infrastructure and entertainment distribution has moved to the Internet.

- Cloud computing was invented to help enterprises manage the massive computing resources they now need.

- The IPv4 32-bit address space has been exhausted, but IPv6 has not yet taken over the bulk of Internet traffic.

- In a deregulated, competitive world, network providers control costs by allocating resources dynamically, rather than provisioning networks with static resources for peak loads.

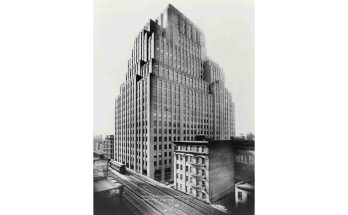

Here is a conundrum. The Internet is meeting these new challenges fairly well, yet neither the IP protocol suite nor the way experts describe the Internet has changed significantly since 1993. Figure 1 shows the headers of a typical packet in the AT&T backbone, giving us clear evidence that the challenges have been met by mechanisms well outside the limits of the classic Internet architecture. In the classic description, the only headers between HTTP and Ethernet would be one TCP header and one IP header.

In this article, we present a new way of describing the Internet, one better attuned to the realities of networking today and to the challenges of the future. Its central idea is that the architecture of the Internet is a flexible composition of many networks: not just the networks acknowledged in the classic Internet architecture, but many other networks both above and below the public Internet in a hierarchy of abstraction. For example, the headers in Figure 1 indicate that the packet is being transmitted through six networks below the application system. Our model emphasizes the interfaces between composed networks while offering an abstract view of network internals, so we are not reduced to grappling with masses of unstructured detail. In addition, we will show that understanding network composition is particularly important for three reasons:
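One way to picture a hierarchy of composed networks, as a sketch under stated assumptions: each network names the lower network (its underlay) that implements its links, and each network contributes its own headers outside those of the networks above it. The `Network` class, the `wire_headers` and `networks_below` functions, and the four-network example (an enterprise network tunneled over the public Internet) are hypothetical illustrations; they do not reproduce the packet in Figure 1.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Network:
    """Toy model of one network in a hierarchy of abstraction (hypothetical)."""
    name: str
    headers: tuple[str, ...]             # headers this network adds, outermost first
    underlay: Optional[Network] = None   # lower network that implements this network's links


def wire_headers(top: Network) -> list[str]:
    """All headers on the wire, outermost first, for a packet sent in `top`."""
    headers: list[str] = []
    current: Optional[Network] = top
    while current is not None:
        # Each lower network wraps everything produced by the networks above it.
        headers = list(current.headers) + headers
        current = current.underlay
    return headers


def networks_below(top: Network) -> int:
    """Number of networks beneath `top` in the composition."""
    count, current = 0, top.underlay
    while current is not None:
        count, current = count + 1, current.underlay
    return count


# Hypothetical composition: a web application over an enterprise network
# that is itself tunneled (IP in IP) across the public Internet.
link = Network("access link", ("Ethernet",))
internet = Network("public Internet", ("IP",), link)
enterprise = Network("enterprise network", ("IP", "TCP"), internet)
web = Network("web application", ("HTTP",), enterprise)

print(wire_headers(web))    # ['Ethernet', 'IP', 'IP', 'TCP', 'HTTP']
print(networks_below(web))  # 3
```

Unlike a fixed four-layer stack, nothing in this model bounds the depth of the hierarchy, and the same protocol (here IP) can appear in more than one network.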

Reuse of solution patterns: In the new model, each composable network is a microcosm of networking, with the potential to have all the basic mechanisms of networking such as a namespace, routing, a forwarding protocol, session protocols, and directories. Our experience with the model shows this perspective illuminates solution patterns for problems that occur in many different contexts, so that the patterns (and their implementations!) can be reused. This is a key insight of Day’s seminal book Patterns in Network Architecture. By showing that interesting networking mechanisms can be found at higher levels of abstraction, the new model helps to bridge the artificial and unproductive divide between networking and distributed systems.
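The microcosm view can be sketched as a small data structure. In this minimal, hypothetical model, a network has its own namespace (the set of member names), a directory that maps other names onto members, routing (a breadth-first search that fills a next-hop table), and forwarding (following next hops to a destination). Session protocols are omitted, and the class name `MicrocosmNetwork` and the example topology are assumptions made for illustration, not a model taken from the article.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class MicrocosmNetwork:
    """Hypothetical model of one self-contained network and its own mechanisms."""
    members: set[str] = field(default_factory=set)                    # namespace
    links: dict[str, set[str]] = field(default_factory=dict)          # adjacency between members
    directory: dict[str, str] = field(default_factory=dict)           # other name -> member name
    next_hop: dict[tuple[str, str], str] = field(default_factory=dict)  # (node, dst) -> next node

    def add_link(self, a: str, b: str) -> None:
        for x, y in ((a, b), (b, a)):
            self.members.add(x)
            self.links.setdefault(x, set()).add(y)

    def compute_routes(self) -> None:
        """Routing: a BFS outward from each destination records every node's next hop."""
        self.next_hop.clear()
        for dst in self.members:
            seen = {dst}
            queue = deque([dst])
            while queue:
                node = queue.popleft()
                for neighbor in sorted(self.links.get(node, ())):
                    if neighbor not in seen:
                        seen.add(neighbor)
                        self.next_hop[(neighbor, dst)] = node  # one step closer to dst
                        queue.append(neighbor)

    def forward(self, src: str, name: str) -> list[str]:
        """Forwarding: resolve `name` via the directory, then follow next hops."""
        dst = self.directory.get(name, name)
        if dst not in self.members:
            raise KeyError(f"{name!r} is not in this network's namespace")
        path = [src]
        while path[-1] != dst:
            path.append(self.next_hop[(path[-1], dst)])
        return path


net = MicrocosmNetwork()
for a, b in [("h1", "s1"), ("s1", "s2"), ("s2", "h2")]:
    net.add_link(a, b)
net.directory["printer"] = "h2"  # hypothetical directory entry
net.compute_routes()
path = net.forward("h1", "printer")
print(path)  # ['h1', 's1', 's2', 'h2']
```

Because every network in this model carries its own copy of these mechanisms, the same routing and forwarding code could serve a network at any level of abstraction, which is the kind of pattern reuse described above.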

Verification of trustworthy services: Practically every issue of Communications contains a warning about the risks of rapidly increasing automation, because software systems are too complex for people to understand or control, and too complex to make reliable. Networks are a central part of the growth of automation, and there will be increasing pressure to define requirements on communication services and to verify they are satisfied. As we will show, the properties of trustworthy services are defined at the interfaces between networks, and are usually dependent on the interaction of multiple networks. This means they cannot be verified without a formal framework for network composition.

Evolution toward a better Internet: In response to the weaknesses of the current Internet, many researchers have investigated “future Internet architectures” based on new technology and “clean slate” approaches. These architectures are not compatible enough to merge into one network design. Even if they were, it is debatable whether they could satisfy the demands for specialized services and localized cost/performance trade-offs that have already created so much complexity. A study of compositional principles and compositional reasoning might be the key to finding the simplest Internet architecture that can satisfy extremely diverse requirements.

We begin with the principles of the classic architecture, then discuss why they have become less useful and how they can be replaced. This should make clear that, despite the familiar terms and examples, we are proposing a genuinely new and different way of talking about networks. We close by considering potential benefits of the new model.

About the Authors:

Pamela Zave is a researcher in the Department of Computer Science at Princeton University, Princeton, NJ, USA.

Jennifer Rexford is the Gordon Y.S. Wu Professor of Engineering in the Department of Computer Science at Princeton University, Princeton, NJ, USA.